Adding search to a site or application can make it much easier to navigate. When users can find content relevant to what they are looking for, they tend to visit more pages and spend more time on them. This post discusses some of the design decisions I made when determining how to add search to this site. It also touches on some of the research I did while approaching the problem. To see how I implemented it, jump to part 2.

Background

I started broad with definitions and progressively narrowed toward the examples that best fit my use case.

What is Search?

Search is one of many kinds of information retrieval systems. Any information retrieval system aims to provide the searcher with information relevant to what they are looking for, usually in service of a broader goal or information-seeking behavior. Information retrieval leans heavily on the formalisms of many other subjects, including data structures and algorithms, linear algebra, probability theory, human interaction design, and natural language processing.

Search UX

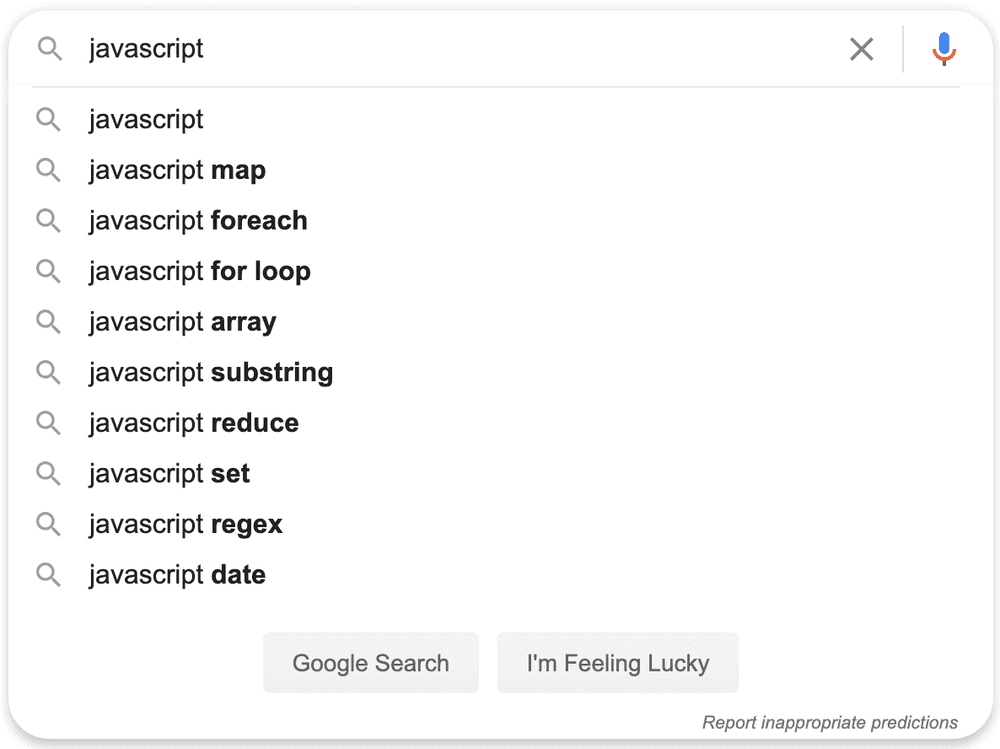

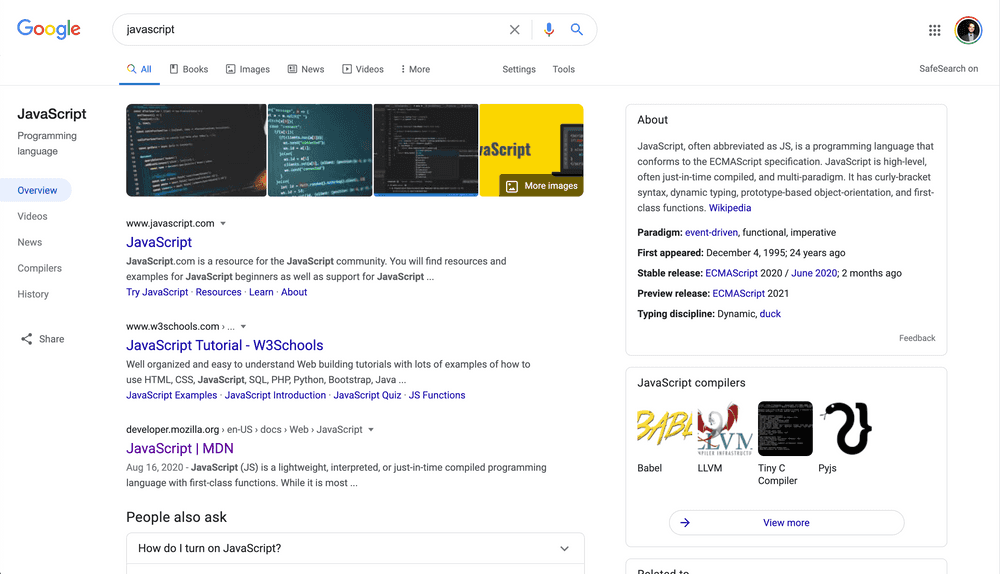

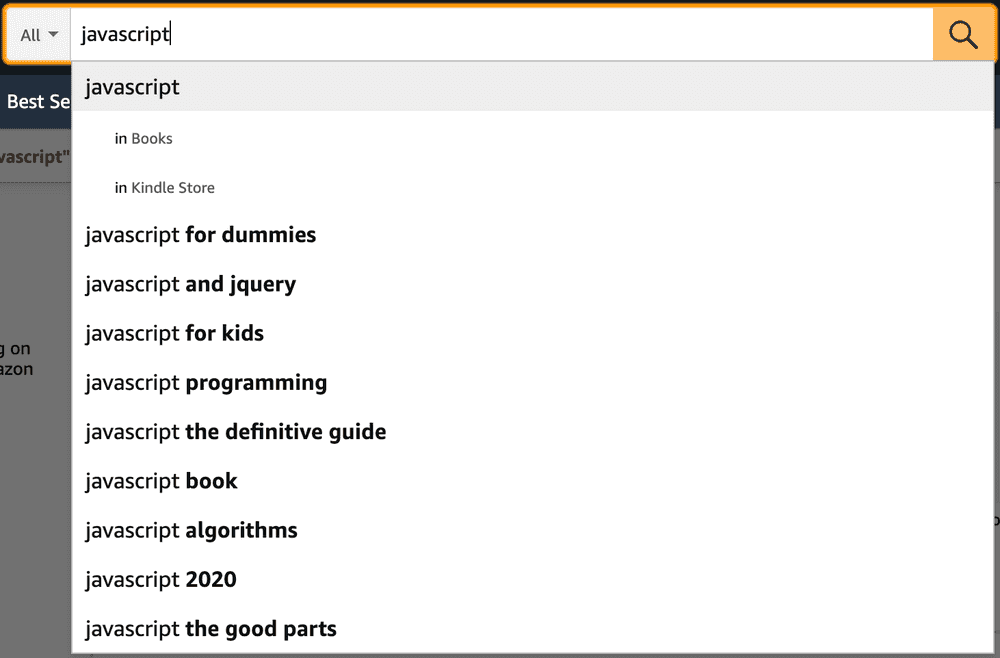

Autocomplete is ubiquitous in search these days. Users expect possible searches and content to be suggested to them as they type. Autocomplete typically takes one of two forms. In the first, it suggests searches and takes users to a search page with more detailed results. In the second, the suggestions are actual pieces of content, and choosing one takes users directly to that content.

Autocomplete as an entry to a search results page

This works well when there are a lot of results and a lot of different kinds of results. The canonical example is Google. The results page has rich content that is tailored to the query.

Another example of this type of search experience is Amazon. The results page on Amazon is faceted and allows users to filter and narrow their results.

Autocomplete as the entire search experience

An alternative user experience is to have autocomplete suggest results instead of queries. The big difference here is that when a user picks a suggestion, it takes them directly to the result instead of using the suggestion to perform a search, as we saw with Google and Amazon.

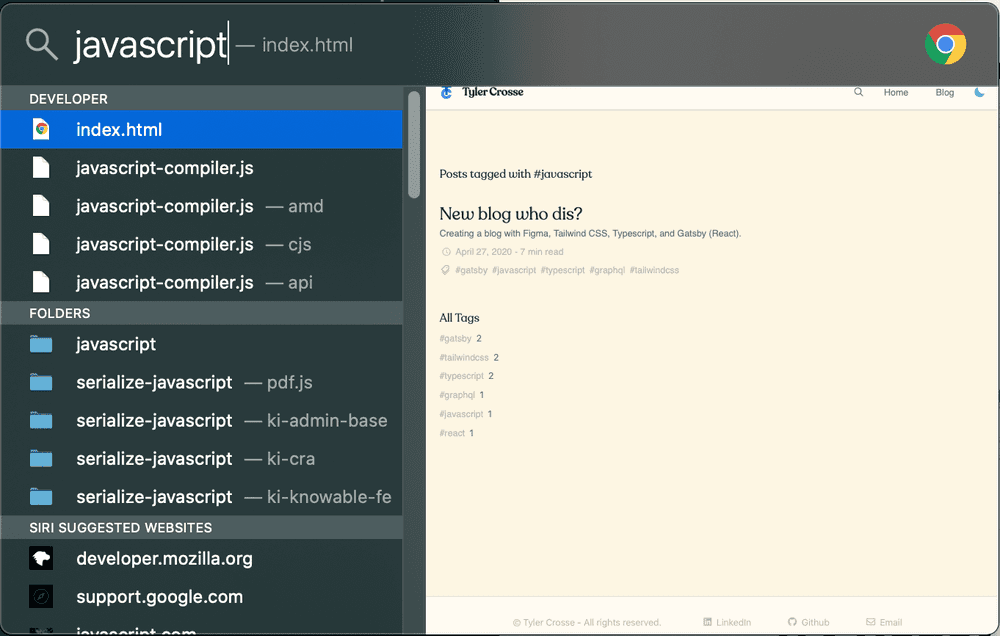

Spotlight Search on macOS is a great example. It provides suggested results across many different categories of content. I’ve chosen to gloss over the other fantastic things Spotlight does, like doing math and providing interactive previews of content, all of which feel out of scope for my search feature.

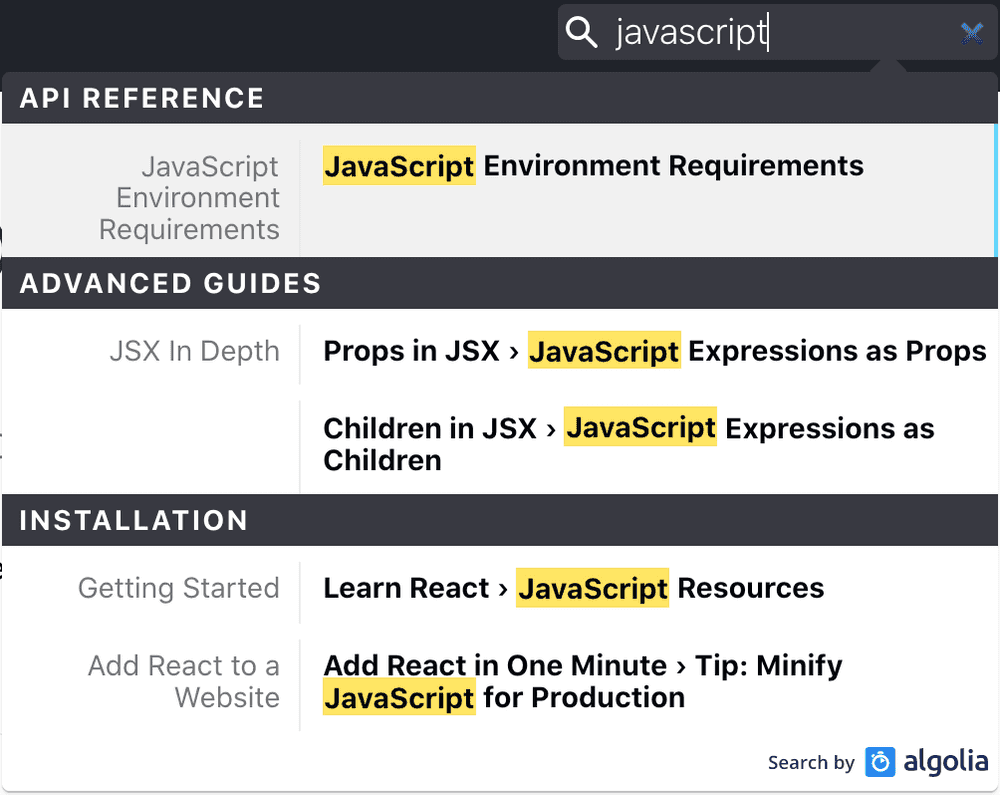

Algolia DocSearch is another example of this flavor of search UX, and one that most developers are likely familiar with.

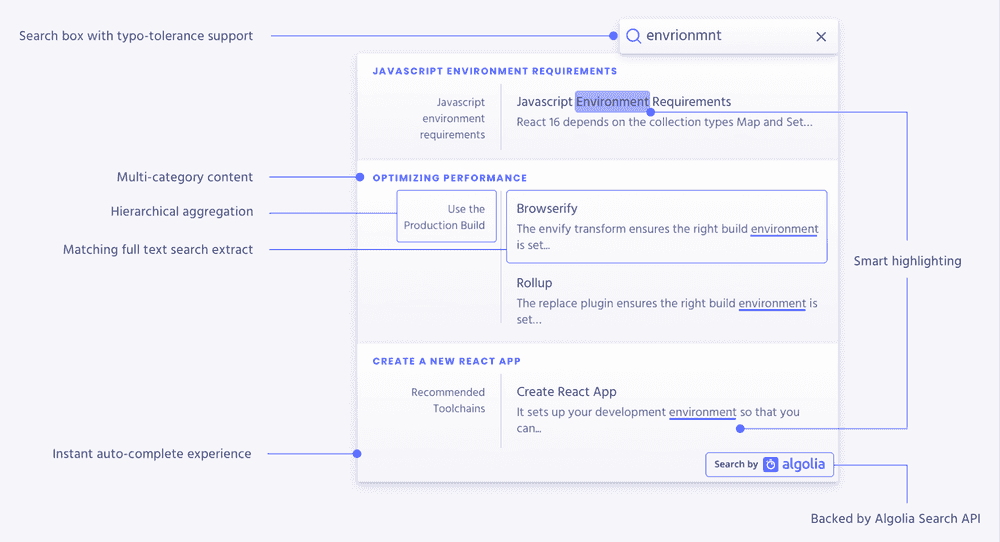

Here’s a breakdown of some of the key features of Algolia DocSearch from their product page.

How do these implementations work?

The big players in the search space, like Google and Amazon, use incredibly sophisticated techniques to make query suggestions for autocomplete and return relevant results. They do things like perform stemming and pronunciation-based spelling corrections, the output of which is fed into a cascade of additional ML models to determine the intent of the search, its relationship to similar queries, and possible results. Once a query is selected, returning results depends on well-built indexes and smart techniques for using those indexes across enormous distributed datasets.

Design Decisions for my Search

My site has the huge benefit of not having much content, especially compared to all the web pages on the internet. This means I don’t need to do much to improve the relevance of the results. String matching with a little typo tolerance and ranking is plenty. I like the general UI of Mac Spotlight and Algolia, with results grouped into categories and matching strings highlighted. For my site I wanted to start with searching across blog posts, expand it to tags, and then potentially to additional content types like project breakdowns if I add them to my site in the future.
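As a rough illustration of the grouped, highlighted results described above (a minimal sketch, not the code used on this site), the two UI behaviors could look like this. The `SearchHit` shape, the category names, and both function names are assumptions made up for the example.

```typescript
// Hypothetical result shape; the category names are illustrative only.
interface SearchHit {
  title: string;
  category: string; // e.g. "posts" or "tags"
}

// Wrap the first case-insensitive occurrence of `query` in <mark> tags.
// Assumes `text` is already HTML-escaped and is plain ASCII-like content.
function highlightMatch(text: string, query: string): string {
  const start = text.toLowerCase().indexOf(query.toLowerCase());
  if (query.length === 0 || start === -1) return text;
  const end = start + query.length;
  return (
    text.slice(0, start) + "<mark>" + text.slice(start, end) + "</mark>" + text.slice(end)
  );
}

// Bucket hits by category so the UI can render one heading per group.
function groupByCategory(hits: SearchHit[]): Record<string, SearchHit[]> {
  const groups: Record<string, SearchHit[]> = {};
  hits.forEach((hit) => {
    const bucket = groups[hit.category] || [];
    bucket.push(hit);
    groups[hit.category] = bucket;
  });
  return groups;
}
```

Keeping highlighting and grouping as separate, pure functions means either one can be swapped out later without touching the matching logic.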

Typically, searches performed on the web involve making a request to a server, which then returns results. This site is static, meaning it doesn’t make additional requests to the server for data beyond loading pages the first time. Static search, like a static site, does not involve a round trip to a server to request data after the page loads. This makes searches a lot quicker and the implementation simpler to add. The tradeoff is that static searches are more limited in the amount of data they can index and handle. They are also slightly more limited in how complex the search implementation can be, since all of the search logic runs on the client. For my simple static blog and personal site I’ll never have the volume of data that would make this an issue. Start with the skateboard and KISS.
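To make the static approach concrete, here is a minimal sketch under stated assumptions: a build step serializes a small index to JSON, and the client parses it once and answers every query in memory. The `IndexEntry` fields and the function names are hypothetical, and the matching is plain substring matching with no typo tolerance.

```typescript
// Hypothetical record for one page; the field names are assumptions.
interface IndexEntry {
  title: string;
  url: string;
  body: string;
}

// Build step (run once when the site is generated): keep only what search
// needs and serialize it so the JSON can ship alongside the static pages.
function buildIndex(entries: IndexEntry[]): string {
  return JSON.stringify(
    entries.map((e) => ({ title: e.title, url: e.url, body: e.body.toLowerCase() }))
  );
}

// Client step: parse the shipped JSON once, then search without any network call.
function createSearcher(indexJson: string): (query: string) => IndexEntry[] {
  const entries: IndexEntry[] = JSON.parse(indexJson);
  return (query: string) => {
    const q = query.trim().toLowerCase();
    if (q.length === 0) return [];
    return entries.filter(
      (e) => e.title.toLowerCase().indexOf(q) !== -1 || e.body.indexOf(q) !== -1
    );
  };
}
```

Because the whole index travels with the page, its size is the practical ceiling on how much content this approach can handle.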

Possible solutions

Here’s the list of features I had narrowed down to:

- Fairly simple and easy to implement.

- Works with a static site, or at least doesn’t require me to manage a server.

- Performs typo tolerant string matching.

- Could potentially be used with an index across multiple content categories or multiple indexes.

I cast a fairly broad net here as well. I was able to quickly cross off the options I would need to manage myself, like the Apache Lucene-derived search servers Solr and Elasticsearch. I also quickly nixed the search-as-a-service providers, including Algolia, Swiftype, and Searchspring. These are all great options, but they are better suited to larger datasets than the one I’m dealing with.

Forming queries is a harder task for users than analyzing results.

I then took a look at search libraries that could be used on static sites. Lunr and js-search, Brian Vaughn’s alternative to Lunr, are good options here. They use the nearly ubiquitous tf-idf as a weighting factor for their indexes. They have handy utilities for improving the quality of the indexes, like tokenization, stemming, and stop-word removal. These techniques are table stakes for the managed and SaaS options mentioned above but are nice to see in a library meant to run on the client (static). The biggest shortcoming I found when playing with these was the lack of typo tolerance. They could return the most relevant result, but only with a correctly formed query. As someone with careless fingers, typo tolerance is a must-have.
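For readers unfamiliar with tf-idf, the following is a minimal sketch of one textbook variant, combined with simple tokenization and stop-word removal. It is not how either library is implemented internally; the stop-word list, function names, and exact weighting formula are assumptions chosen for illustration, and stemming is left out.

```typescript
// A tiny illustrative stop-word list; real libraries ship much longer ones.
const STOP_WORDS: string[] = ["a", "an", "and", "the", "of", "to", "is", "in"];

// Lowercase, split on anything that is not a letter or digit, drop stop words.
function tokenize(text: string): string[] {
  return text
    .toLowerCase()
    .split(/[^a-z0-9]+/)
    .filter((t) => t.length > 0 && STOP_WORDS.indexOf(t) === -1);
}

// Score each document for a query as the sum over query terms of tf * idf,
// with tf = term count / document length and idf = ln(N / documents containing the term).
function tfIdfScores(docs: string[], query: string): number[] {
  const tokenized = docs.map(tokenize);
  const queryTerms = tokenize(query);
  return tokenized.map((tokens) => {
    let score = 0;
    queryTerms.forEach((term) => {
      const count = tokens.filter((t) => t === term).length;
      const docsWithTerm = tokenized.filter((d) => d.indexOf(term) !== -1).length;
      if (count === 0 || docsWithTerm === 0) return;
      score += (count / tokens.length) * Math.log(docs.length / docsWithTerm);
    });
    return score;
  });
}
```

Note that a misspelled query term simply finds no matching token and contributes nothing to the score, which is the typo-tolerance gap described above.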

Two other interesting options I looked at were Stork and tinysearch. Both are written in Rust and compiled to WebAssembly, which helps reduce the size of the library code and improve the speed of accessing an index. Again, these options lack typo tolerance. If you don’t need fuzzy searches or are willing to correct queries, these could be great options.

As far as fuzzy searching (approximate string matching) goes, the popular JS libraries include fuse.js, flexsearch, fuzzaldrin, and fuzzaldrin-plus. These all work in slightly different ways. Fuse uses a modified bitap algorithm (not bitmap) to score results. Flexsearch has an optional contextual index that is supposed to aid in the relevance and speed of multi-term queries. Fuzzaldrin and Fuzzaldrin-plus are both libraries designed for searching paths, methods, and other code-specific search tasks and inputs.
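To give a feel for what bitap does, here is a minimal sketch of the textbook approximate variant (often attributed to Wu and Manber), which tracks match states as bits in an integer. This is not Fuse’s modified implementation; the function name and the choice to return the smallest error count are assumptions for illustration.

```typescript
// Returns the smallest number of edits (<= maxErrors) with which `pattern`
// matches some substring of `text`, or -1 if no such match exists.
function bitapMinErrors(text: string, pattern: string, maxErrors: number): number {
  const m = pattern.length;
  if (m === 0) return 0;
  if (m > 31) throw new Error("pattern must fit in a 32-bit mask");
  // Errors are capped at m - 1, since m errors would match any text.
  const k = Math.max(0, Math.min(maxErrors, m - 1));

  // masks[c] has bit i set when pattern[i] === c.
  const masks: Record<string, number> = {};
  for (let i = 0; i < m; i++) {
    const c = pattern.charAt(i);
    masks[c] = (masks[c] || 0) | (1 << i);
  }
  const accept = 1 << (m - 1);

  // state[d] bit i set => pattern[0..i] matches text ending here with <= d errors.
  let state: number[] = [];
  for (let d = 0; d <= k; d++) state.push((1 << d) - 1);

  let best = -1;
  for (let j = 0; j < text.length; j++) {
    const mask = masks[text.charAt(j)] || 0;
    const prev = state;
    const next: number[] = [((prev[0] << 1) | 1) & mask];
    for (let d = 1; d <= k; d++) {
      const match = ((prev[d] << 1) | 1) & mask;
      const extraTextChar = prev[d - 1]; // text has a character the pattern lacks
      const substitution = (prev[d - 1] << 1) | 1; // characters differ
      const missingTextChar = (next[d - 1] << 1) | 1; // pattern character absent from text
      next.push(match | extraTextChar | substitution | missingTextChar);
    }
    state = next;
    for (let d = 0; d <= k; d++) {
      if ((state[d] & accept) !== 0) {
        if (best === -1 || d < best) best = d;
        break;
      }
    }
  }
  return best;
}
```

For example, `bitapMinErrors("hello world", "wurld", 1)` returns 1, because "world" is one substitution away from the misspelled pattern, while an exact hit such as "world" returns 0.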

After an initial broad search for libraries based on their features, Fuse and Flexsearch seemed to be the two best choices. Beyond the feature set, I also looked at how well maintained and popular they are. Here’s a link to an npm trends comparison of these and the JS libraries I already mentioned. Fuse is far and away the most popular, with over 2 million weekly downloads and 11.3k GitHub stars at the time of writing. Lunr is a distant second with a still respectable 500k weekly downloads and 7.1k GitHub stars.

From all of these data points, Fuse felt like the choice that made the most sense for my requirements. It’s easy to implement, can be set up with minimal configuration, works well on the client side, returns results quickly, supports fuzzy queries (approximate string matching), and is well maintained, thoroughly documented, and popular. The most significant downside seems to be that it will occasionally return false positives or results that don’t make sense. For example, when searching for ‘react’, it registers a partial hit on ‘create’. This slight negative felt like an acceptable tradeoff.
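The false positive above has a simple explanation that can be checked with a generic approximate substring distance. The sketch below uses a standard dynamic-programming formulation (sometimes called Sellers’ algorithm), which is a stand-in for illustration and not Fuse’s own scoring; the function name is hypothetical.

```typescript
// Smallest edit distance between `pattern` and ANY substring of `text`,
// where insertions, deletions, and substitutions each cost 1.
function bestSubstringDistance(text: string, pattern: string): number {
  // prev[i] = cheapest way to match pattern[0..i) against a substring
  // ending at the previous text position.
  let prev: number[] = [];
  for (let i = 0; i <= pattern.length; i++) prev.push(i);
  let best = prev[pattern.length];

  for (let j = 1; j <= text.length; j++) {
    const curr: number[] = [0]; // a match may start at any text position for free
    for (let i = 1; i <= pattern.length; i++) {
      const cost = pattern.charAt(i - 1) === text.charAt(j - 1) ? 0 : 1;
      curr.push(Math.min(prev[i - 1] + cost, prev[i] + 1, curr[i - 1] + 1));
    }
    best = Math.min(best, curr[pattern.length]);
    prev = curr;
  }
  return best;
}
```

Here `bestSubstringDistance("create", "react")` evaluates to 1: the substring "reat" inside "create" is only one dropped letter away from "react", so any matcher that tolerates a single typo will treat it as a plausible hit.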

Check out the nuts and bolts of how I added a Fuse.js-powered search to my site in part two of this series of posts on search.
